Hewlett-Packard’s ambitious plan to reinvent computing will begin with the release of a prototype operating system next year.

By Tom Simonite on December 8, 2014

Why It Matters: U.S. data centers consumed 91 billion kilowatt-hours of electricity in 2013, twice as much as all the households in New York City, according to the Natural Resources Defense Council.

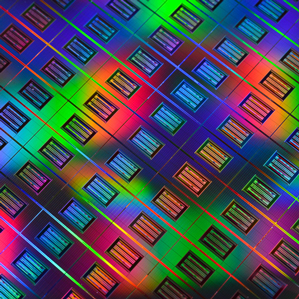

Closeup of HP Memristor devices on a 300 millimeter wafer.

Hewlett-Packard will take a big step toward shaking up its own troubled business and the entire computing industry next year when it releases an operating system for an exotic new computer. The company’s research division is working to create a computer HP calls The Machine. It is meant to be the first of a new dynasty of computers that are much more energy-efficient and powerful than current products.

HP aims to achieve its goals primarily by using a new kind of computer memory instead of the two types that computers use today. The current approach originated in the 1940s, and the need to shuttle data back and forth between the two types of memory limits performance.

“A model from the beginning of computing has been reflected in everything since, and it is holding us back,” says Kirk Bresniker, chief architect for The Machine. The project is run inside HP Labs and accounts for three-quarters of the 200-person research staff. CEO Meg Whitman has expanded HP’s research spending in support of the project, says Bresniker, though he would not disclose the amount.

The Machine is designed to compete with the servers that run corporate networks and the services of Internet companies such as Google and Facebook. Bresniker says elements of its design could one day be adapted for smaller devices, too.

HP must still make significant progress in both software and hardware to make its new computer a reality. In particular, the company needs to perfect a new form of computer memory based on an electronic component called a memristor (see “Memristor Memory Readied for Production”).

A working prototype of The Machine should be ready by 2016, says Bresniker. However, he wants researchers and programmers to get familiar with how it will work well before then. His team aims to complete an operating system designed for The Machine, called Linux++, in June 2015.
Software that emulates the hardware design of The Machine and other tools will be released so that programmers can test their code against the new operating system. Linux++ is intended to ultimately be replaced by an operating system designed from scratch for The Machine, which HP calls Carbon.

Programmers’ experiments with Linux++ will help people understand the project and aid HP’s progress, says Bresniker. He hopes to gain more clues about, for example, what types of software will benefit most from the new approach.

The main difference between The Machine and conventional computers is that HP’s design will use a single kind of memory for both temporary and long-term data storage. Existing computers store their operating systems, programs, and files on either a hard disk drive or a flash drive. To run a program or load a document, data must be retrieved from the hard drive and loaded into a form of memory, called RAM, that is much faster but can’t store data very densely or keep hold of it when the power is turned off.

HP plans to use a single kind of memory, in the form of memristors, for both long- and short-term data storage in The Machine. Not having to move data back and forth should deliver major power and time savings. Memristor memory also can retain data when powered off, should be faster than RAM, and promises to store more data than comparably sized hard drives today.

The Machine’s design includes other novel features such as optical fiber instead of copper wiring for moving data around. HP’s simulations suggest that a server built to The Machine’s blueprint could be six times more powerful than an equivalent conventional design, while using just 1.25 percent of the energy and being around 10 percent the size.
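
As a rough back-of-the-envelope check on what those simulation figures would imply, the Python sketch below divides the claimed performance gain by the claimed energy and size fractions. The function name and the normalization of the conventional server to 1 on every axis are illustrative assumptions, not HP's methodology, and the article does not say whether "energy" refers to power draw or to energy per task.

```python
from fractions import Fraction


def implied_ratios(perf_gain, energy_fraction, size_fraction):
    """Return (performance per unit energy, performance per unit size),
    relative to a conventional server normalized to 1 on every axis.

    Arguments are decimal strings so the arithmetic stays exact.
    """
    perf = Fraction(perf_gain)
    return perf / Fraction(energy_fraction), perf / Fraction(size_fraction)


# Figures quoted from HP's simulations: 6x performance,
# 1.25 percent of the energy, roughly 10 percent of the size.
per_energy, per_size = implied_ratios("6", "0.0125", "0.10")
print(f"performance per unit energy: {per_energy}x")
print(f"performance per unit size: {per_size}x")
```

Taken at face value, the quoted figures work out to roughly 480 times the performance per unit of energy and 60 times the performance per unit of size. These are simple ratios of simulated estimates, not measurements.
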
HP’s ideas are likely being closely watched by companies such as Google that rely on large numbers of computer servers and are eager for improvements in energy efficiency and computing power, says Umakishore Ramachandran, a professor at Georgia Tech. That said, a radical new design like that of The Machine will require new approaches to writing software, says Ramachandran.

There are other prospects for reinvention besides HP’s technology. Companies such as Google and Facebook have shown themselves to be capable of refining server designs. And other new forms of memory, all with the potential to make large-scale cloud services more efficient, are being tested by researchers and nearing commercialization (see “Denser, Faster Memory Challenges Both DRAM and Flash” and “A Preview of Future Disk Drives”).

“Right now it’s not clear what technology is going to become useful in a big way,” says Steven Swanson, an associate professor at the University of California, San Diego, who researches large-scale computer systems.

HP may also face skepticism because it has fallen behind its own timetable for getting memristor memory to market. When the company began working to commercialize the components, together with semiconductor manufacturer Hynix, in 2010, the first products were predicted for 2013 (see “Memristor Memory Readied for Production”). Today, Bresniker says the first working chips won’t be sent to HP partners until 2016 at the earliest.

Source: www.technologyreview.com
